Facebook unveils Big Basin, new server geared for deep learning

Facebook on Wednesday unveiled Big Basin, its latest GPU server geared for deep learning. As with its predecessor, Big Sur, the design will be open-sourced through the Open Compute Project.

Compared with Big Sur, the new server lets Facebook train machine learning models that are 30 percent larger. Two factors make that possible: GPU memory increases from 12 GB to 16 GB, and the new GPUs deliver greater arithmetic throughput.


"Right now we use AI to recognize people and objects in photos," Kevin Lee, a technical program manager at Facebook, told ZDNet ahead of the Open Compute Summit. "Chances are if you use Facebook, you're using AI models that have been trained with Big Sur." With Big Basin, he continued, "The aim is to provide a lot more compute power to train more and more complex AI models, creating a new server that will fit even better with our needs."

Investments in AI fit into the 10-year vision for Facebook that CEO Mark Zuckerberg laid out last year. Beyond improved photo tagging on its platforms, Facebook has started using AI in a variety of products and features, such as describing photos aloud to visually impaired users and identifying potential "suicide or self injury" posts.

"We're trying to include the AI experience within all of our apps in Facebook," Lee said.

To step up those efforts, Lee's team sought feedback on how to improve Big Sur from other Facebook teams, including Applied Machine Learning (AML), Facebook AI Research (FAIR) and Infrastructure.

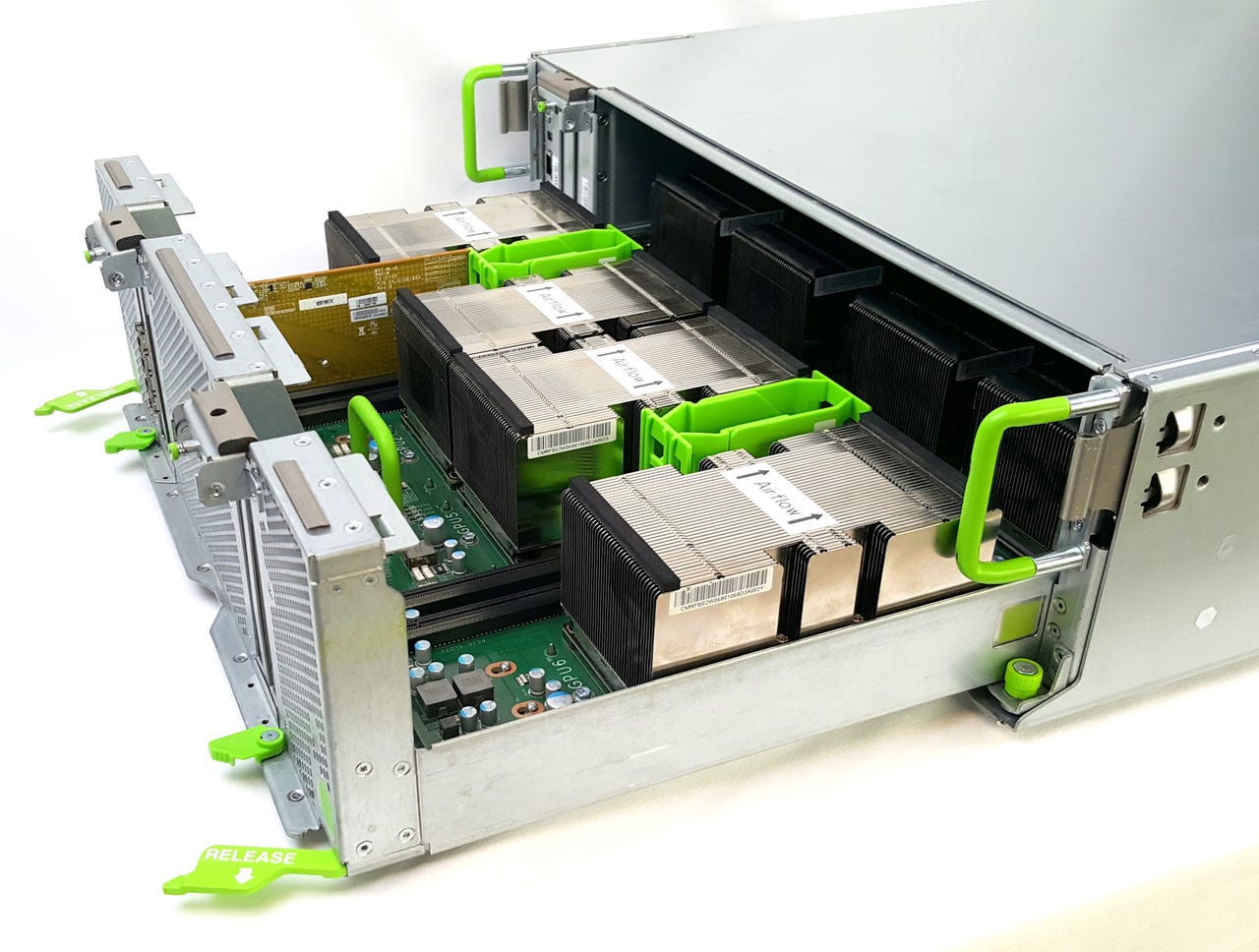

The improvements start with more powerful GPUs: Big Basin features eight Nvidia Tesla P100 GPU accelerators. The system also uses NVLink, Nvidia's high-bandwidth interconnect, to move more data directly between the GPUs.

Unlike Big Sur, Big Basin has a modular, disaggregated design, which lets Facebook scale individual hardware components independently. The design also improves serviceability: because the accelerator tray, inner chassis and outer chassis are separate, repairs are easier and result in less downtime. The server was also designed for better thermal efficiency, with the GPUs now placed closer to the cool air drawn into the system.

By open-sourcing the design, Lee said, Facebook hopes others will improve on it and that it will foster greater collaboration on building complex AI systems.