Google says 'exponential' growth of AI is changing nature of compute

The explosion of AI and machine learning is changing the very nature of computing, so says one of the biggest practitioners of AI, Google.

Google software engineer Cliff Young gave the opening keynote on Thursday morning at the Linley Group Fall Processor Conference, a popular computer-chip symposium put on by venerable semiconductor analysis firm The Linley Group, in Santa Clara, California.

Said Young, the use of AI has reached an "exponential phase" at the very same time that Moore's Law, the decades-old rule of thumb about semiconductor progress, has ground to a standstill.

"The times are slightly neurotic," he mused. "Digital CMOS is slowing down, we see that in Intel's woes in 10-nanometer [chip production], we see it in GlobalFoundries getting out of 7-nanometer, at the same time that there is this deep learning thing happening, there is economic demand." CMOS, or complementary metal-oxide semiconductor, is the dominant technology used to manufacture computer chips.


As conventional chips struggle to achieve greater performance and efficiency, demand from AI researchers is surging, noted Young. He rattled off some stats: the number of academic papers about machine learning posted to arXiv, the pre-print server maintained by Cornell University, is doubling every 18 months. The number of internal projects focused on AI at Google, he said, is also doubling every 18 months. Even more intense, the number of floating-point operations needed to train machine learning neural networks is doubling every three and a half months.
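Those doubling times are easier to compare as annual growth factors. A quantity that doubles every T months grows by a factor of 2^(12/T) in a year, and the short sketch below simply applies that formula. The 24-month doubling period used for Moore's Law is an assumption added for comparison (a commonly quoted figure), not a number Young gave.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Growth over 12 months for a quantity that doubles every `doubling_months` months."""
    return 2 ** (12 / doubling_months)

# The first three doubling periods are the figures from Young's talk; the
# Moore's Law period (24 months) is an assumed, commonly quoted value.
doubling_periods = {
    "arXiv ML papers": 18,
    "Google internal AI projects": 18,
    "neural-network compute (FLOPs)": 3.5,
    "Moore's Law (assumed)": 24,
}

for name, months in doubling_periods.items():
    print(f"{name:32s} x{annual_growth_factor(months):6.2f} per year")
```

Under these assumptions, the compute figure works out to roughly a 10.8x increase per year, against about 1.6x for the paper and project counts and about 1.4x for the assumed Moore's Law pace.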

All that growth in computing demand is adding up to a "Super Moore's Law," said Young, a phenomenon he called "a bit terrifying," and "a little dangerous," and "something to worry about."

"Why all this exponential growth?" he asked of AI. "In part, because deep learning just works," he said. "For a long time, I spent my career ignoring machine learning," said Young. "It wasn't clear these things were going to take off."

But then breakthroughs in areas such as image recognition started coming quickly, and it became clear that deep learning is "incredibly effective," he said. "We have been an AI-first company for most of the last five years. We rebuilt most of our businesses on it," he said, from search to ads and beyond.

The Google Brain team, which leads research on AI, is demanding "gigantic machines," said Young. One common measure of a neural network's size is the number of "weights" it employs: numerical parameters, learned during training, that determine how the network transforms the data passing through it.

Whereas conventional neural nets may have hundreds of thousands of such weights, or even millions, Google's scientists are saying "please give us a tera-weight machine": computers capable of handling a trillion weights. That's because "each time you double the size of the [neural] network, we get an improvement in accuracy." Bigger and bigger is the rule in AI.
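To make "weights" concrete, here is a minimal sketch that counts them in a plain fully connected network, assuming one weight per connection between adjacent layers and ignoring bias terms. The layer widths are hypothetical. Doubling every layer's width roughly quadruples the weight count, which hints at how quickly a tera-weight model outgrows a single chip.

```python
def count_weights(layer_widths: list[int]) -> int:
    """Weights in a dense network: one per connection between adjacent layers (biases ignored)."""
    return sum(a * b for a, b in zip(layer_widths, layer_widths[1:]))

# Hypothetical network: three layers of 1,024 units each.
small = [1024, 1024, 1024]
large = [2 * w for w in small]  # double every layer's width

print(count_weights(small))  # 2097152  (2 x 1024 x 1024)
print(count_weights(large))  # 8388608  (2 x 2048 x 2048, four times as many)
```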

To respond, of course, Google has been developing its own line of machine learning chips, the "Tensor Processing Unit." The TPU, and parts like it, are needed because traditional CPUs and graphics chips (GPUs) can't keep up.

"For a very long time, we held back and said Intel and Nvidia are really great at building high-performance systems," said Young. "We crossed that threshold five years ago."

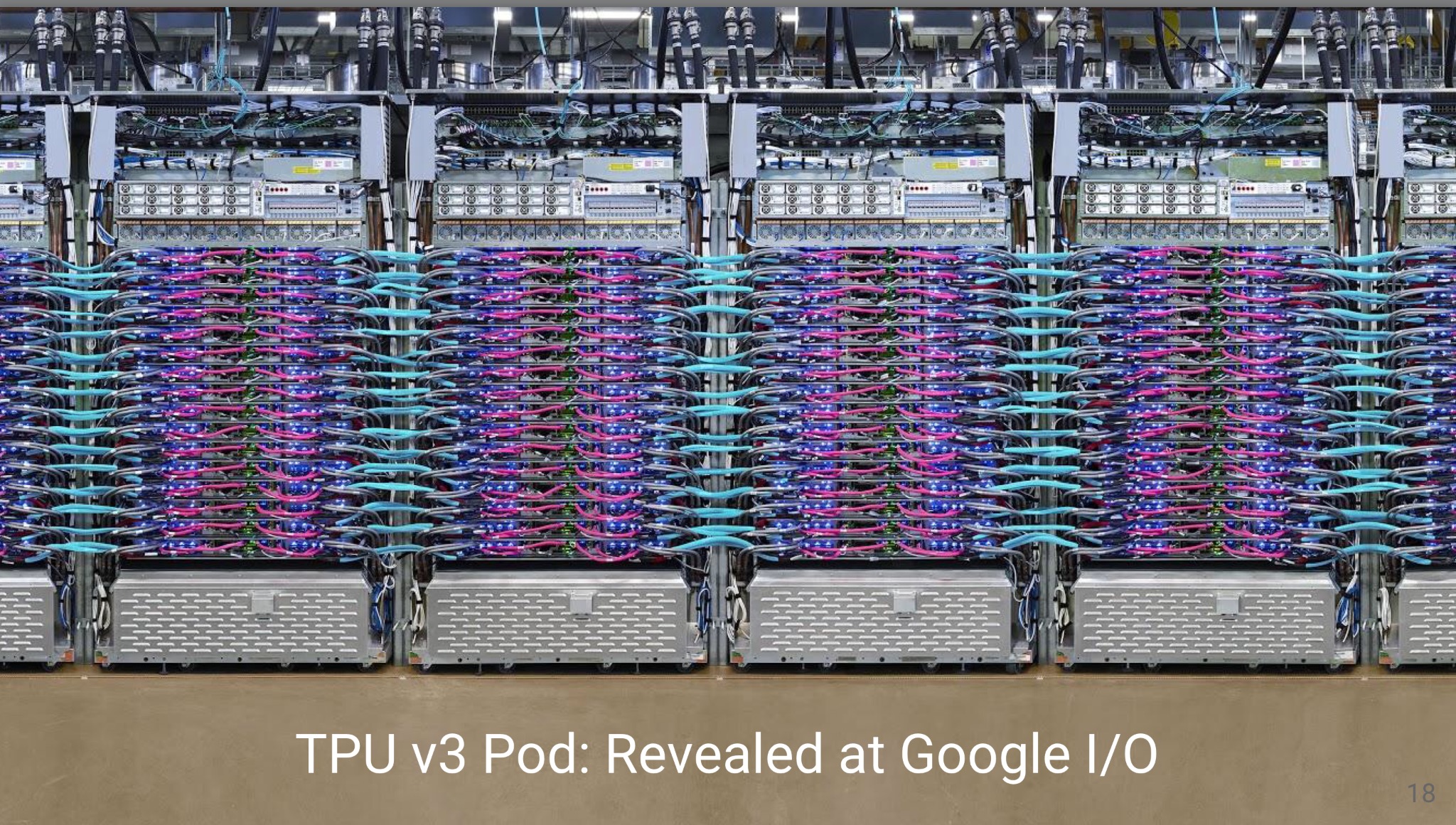

The TPU caused a commotion when Google first detailed it in 2017, with benchmarks showing performance superior to conventional chips. Google is now on its third iteration of the TPU, which it uses internally and also offers as an on-demand compute node through Google Cloud.


The company continues to make bigger and bigger instances of the TPU. Its "pod" configuration ties together 1,024 individual TPUs into a new kind of supercomputer, and Google intends to "continue scaling" the system, said Young.

"We are building these gigantic multi-computers, with tens of petabytes of computing," he said. "We are pushing relentlessly in a number of directions of progress, the tera-ops keep on climbing."

Such engineering "brings all the issues that come up in supercomputer design," he said.

For example, Google engineers have adopted tricks used by the legendary supercomputing outfit Cray. They combined a "gigantic matrix multiplication unit," the part of the chip that does the brunt of the work for neural network computing, with a "general-purpose vector unit" and a "general-purpose scalar unit," as Cray did. "The combination of scalar and vector units let Cray outperform everything else," he observed.
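As a rough illustration of how work might be split among those three kinds of unit, the sketch below runs one dense neural-network layer in plain Python. The division of labor is an assumption made for illustration, not a description of the TPU's actual design: the matrix multiply stands in for the matrix unit, the element-wise bias add and ReLU for the vector unit, and the loop over batches for the scalar unit's control flow.

```python
def matmul(x, w):
    """Matrix-unit stand-in: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(x[i][p] * w[p][j] for p in range(len(w))) for j in range(len(w[0]))]
            for i in range(len(x))]

def bias_relu(rows, bias):
    """Vector-unit stand-in: element-wise bias add followed by ReLU."""
    return [[max(0.0, v + b) for v, b in zip(row, bias)] for row in rows]

def dense_layer(batches, w, bias):
    """Scalar-unit stand-in: loop control that feeds each batch through the other two stages."""
    outputs = []
    for batch in batches:
        outputs.append(bias_relu(matmul(batch, w), bias))
    return outputs

# Hypothetical 2-input, 2-output layer applied to two batches of one example each.
w = [[1.0, -1.0], [2.0, 0.5]]
bias = [0.0, -1.0]
print(dense_layer([[[1.0, 1.0]], [[-1.0, 0.0]]], w, bias))  # [[[3.0, 0.0]], [[0.0, 0.0]]]
```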

Google has also developed its own novel number formats for the chips. One, called "bfloat16," is a 16-bit way to represent real numbers that makes number crunching on neural networks more efficient: it keeps the numeric range of the standard 32-bit floating-point format while giving up precision. It is colloquially referred to as the "brain float."
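Concretely, a bfloat16 value is the top 16 bits of a 32-bit float: the sign bit, the full 8-bit exponent, and 7 of the 23 fraction bits. A minimal Python sketch of the conversion, assuming round-to-nearest-even on the discarded bits and leaving out NaN and infinity handling, looks like this:

```python
import struct

def float_to_bf16_bits(x: float) -> int:
    """Round a value to bfloat16 and return its 16-bit pattern (NaN/inf not handled)."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]  # float32 bit pattern
    lsb = (bits >> 16) & 1                               # lowest bit that survives truncation
    rounded = bits + 0x7FFF + lsb                        # round to nearest, ties to even
    return (rounded >> 16) & 0xFFFF                      # sign, 8-bit exponent, 7 fraction bits

def bf16_bits_to_float(b: int) -> float:
    """Expand a bfloat16 bit pattern back to a float by zero-filling the low 16 bits."""
    return struct.unpack(">f", struct.pack(">I", b << 16))[0]

print(hex(float_to_bf16_bits(1.0)))                        # 0x3f80
print(bf16_bits_to_float(float_to_bf16_bits(3.14159265)))  # 3.140625
print(bf16_bits_to_float(float_to_bf16_bits(1e30)))        # still finite, unlike 16-bit IEEE half precision
```

The pi example shows the lost precision (only about three significant decimal digits survive), while the 1e30 example shows the retained 32-bit exponent range.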

The TPU draws on the fastest memory chips, so-called high-bandwidth memory, or HBM. There's a surging need for memory capacity in training neural networks, he said.

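"Memory is way more intensive in training," he said. "People talk about hundreds of millions of weights, but it's also an issue of handling the activation" variables of a neural network: the intermediate values each layer produces, which during training are typically held in memory until they are needed to compute updates to the weights.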
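Young's point about activations can be illustrated with a back-of-the-envelope estimate. The sketch below assumes a dense network, counts one stored activation per unit per example, uses 16-bit values, and ignores biases, gradients and optimizer state; the layer and batch sizes are hypothetical.

```python
def training_memory_bytes(layer_widths, batch_size, bytes_per_value=2):
    """Rough (weight_bytes, activation_bytes) for a dense network.

    bytes_per_value=2 assumes a 16-bit format such as bfloat16.
    """
    weights = sum(a * b for a, b in zip(layer_widths, layer_widths[1:]))
    activations = batch_size * sum(layer_widths)  # one saved value per unit per example
    return weights * bytes_per_value, activations * bytes_per_value

widths = [4096] * 8  # hypothetical: eight layers of 4,096 units
for batch in (32, 1024, 8192):
    w_bytes, a_bytes = training_memory_bytes(widths, batch)
    print(f"batch {batch:5d}: weights {w_bytes / 2**20:7.1f} MiB, "
          f"activations {a_bytes / 2**20:7.1f} MiB")
```

With these assumptions the weights occupy 224 MiB regardless of batch size, while activation memory grows from 2 MiB at a batch of 32 to 512 MiB at a batch of 8,192. The same arithmetic puts a tera-weight model at about 2 TB for its weights alone in a 16-bit format.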

And Google is also adjusting how it programs neural nets to make the most of hardware. "We are working a lot on data and model parallelism," with projects such as "Mesh TensorFlow," an adaptation of the company's TensorFlow programming framework that "combines data and model parallelism at pod-scale."
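The difference between the two kinds of parallelism can be shown on a single matrix multiply, the core operation of a neural-network layer. This plain-Python sketch does not use Mesh TensorFlow; it merely simulates "devices" as list slices, and the helper names are hypothetical. Data parallelism gives every device the full weight matrix and a slice of the batch, while model parallelism gives every device the full batch and a slice of the weight matrix's output columns. Both reassemble to the same result as the unsplit multiply.

```python
def matmul(x, w):
    """Plain-Python (n x k) @ (k x m) -> (n x m)."""
    return [[sum(x[i][p] * w[p][j] for p in range(len(w))) for j in range(len(w[0]))]
            for i in range(len(x))]

def split(seq, parts):
    """Split a list into `parts` contiguous chunks whose sizes differ by at most one."""
    size, rem = divmod(len(seq), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < rem else 0)
        chunks.append(seq[start:end])
        start = end
    return chunks

def data_parallel(x, w, devices):
    """Each simulated device holds all of w and a slice of the batch (rows of x)."""
    partials = [matmul(rows, w) for rows in split(x, devices)]
    return [row for part in partials for row in part]  # stack the row slices

def model_parallel(x, w, devices):
    """Each simulated device holds all of x and a slice of w's output columns."""
    col_groups = split(list(range(len(w[0]))), devices)
    partials = [matmul(x, [[row[j] for j in cols] for row in w]) for cols in col_groups]
    # Glue each device's output columns back into full-width rows.
    return [[v for part in partials for v in part[i]] for i in range(len(x))]

x = [[1, 2], [3, 4], [5, 6], [7, 8]]  # batch of four examples
w = [[1, 0, 2], [0, 1, 3]]            # 2 inputs -> 3 outputs
print(matmul(x, w))                   # [[1, 2, 8], [3, 4, 18], [5, 6, 28], [7, 8, 38]]
print(data_parallel(x, w, 2) == matmul(x, w), model_parallel(x, w, 2) == matmul(x, w))  # True True
```

In practice, data parallelism also requires the devices to combine their weight gradients during training, and model parallelism requires exchanging activations between devices; mixing the two is what Young said Mesh TensorFlow does at pod scale.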

There were certain technical details Young shied away from revealing. He noted the company hasn't talked much about the "interconnects," the links that move data between chips, save to say "we have gigantic connectors." He declined to proffer more information, eliciting laughs from the audience.

Young pointed to even more intriguing realms of computing that might not be far away. For example, he suggested computing via analog chips, circuits that process inputs as continuous values, rather than as ones and zeros, could play an important role. "Maybe we will sample from the analog domain, there is some really cool stuff in physics, with analog computing, or in non-volatile technology."

He held out hope for new technology from chip startups such as those appearing at the conference: "There are some super-cool startups, and we need that work because digital CMOS is only going to take us so far; I want those investments to take place."
